Hono on AWS with SST

There are two ways to deploy a Hono app to AWS with SST: serverless, using a Lambda function, or in a container, using ECS with Fargate.

We’ll use both to build a couple of simple apps below.

Examples

We also have a few other Hono examples that you can refer to.

Serverless

We are going to build a serverless Hono API, add an S3 Bucket for file uploads, and deploy it using a Lambda function.

Before you get started, make sure to configure your AWS credentials.

1. Create a project

Let’s start by creating our app.

    npm create hono@latest aws-hono
    cd aws-hono

We are picking the aws-lambda template.

Init SST

Now let’s initialize SST in our app.

    npx sst@latest init
    npm install

Select the defaults and pick AWS. This’ll create an sst.config.ts file in your project root.

2. Add an API

Let’s add a Hono API using an AWS Lambda function. Update your sst.config.ts.

    async run() {
      new sst.aws.Function("Hono", {
        url: true,
        handler: "src/index.handler",
      });
    }

We are enabling the function URL for this.
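The handler value follows the same "<file>.<export>" convention Lambda uses, so "src/index.handler" points at the handler export of src/index.ts, which the aws-lambda template creates with handle(app). A minimal sketch of how such a string splits, assuming the last dot is the separator; parseHandler is a hypothetical helper for illustration, not part of SST:

```typescript
// Hypothetical helper: splits a handler string such as "src/index.handler"
// into the module path and the name of the exported function.
// The last "." is the separator, so dots earlier in the path are kept.
function parseHandler(handler: string): { module: string; exportName: string } {
  const dot = handler.lastIndexOf(".");
  if (dot <= 0 || dot === handler.length - 1) {
    throw new Error(`Invalid handler "${handler}": expected "<path>.<export>"`);
  }
  return { module: handler.slice(0, dot), exportName: handler.slice(dot + 1) };
}

console.log(parseHandler("src/index.handler"));
// { module: 'src/index', exportName: 'handler' }
```

If you rename the export in src/index.ts, the part after the last dot has to change with it.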

Start dev mode

Start your app in dev mode. This runs your functions Live.

    npx sst dev

This will give you the URL of your API.

    ✓ Complete
       Hono: https://gyrork2ll35rsuml2yr4lifuqu0tsjft.lambda-url.us-east-1.on.aws

3. Add an S3 Bucket

Let’s add an S3 Bucket for file uploads. Add this to your sst.config.ts, above the Function.

    const bucket = new sst.aws.Bucket("MyBucket");

Link the bucket

Now, link the bucket to the API.

    new sst.aws.Function("Hono", {
      url: true,
      link: [bucket],
      handler: "src/index.handler",
    });

4. Upload a file

We want the / route of our API to generate a pre-signed URL to upload a file to our S3 Bucket. Replace the Hello Hono route in src/index.ts.
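Before writing the route, it helps to know what getSignedUrl hands back: an ordinary S3 URL whose query string carries a SigV4 signature. X-Amz-Date (the signing time, in YYYYMMDDTHHMMSSZ form) and X-Amz-Expires (the lifetime in seconds, 900 by default unless you pass expiresIn) together say when the URL stops working. A dependency-free sketch that reads those two parameters; presignedUrlExpiry is a hypothetical helper and the sample URL is made up, with several parameters omitted. It is only an illustration; the route to put in src/index.ts comes next.

```typescript
// Hypothetical helper: returns the moment a SigV4 pre-signed URL expires.
function presignedUrlExpiry(presignedUrl: string): Date {
  const params = new URL(presignedUrl).searchParams;
  const amzDate = params.get("X-Amz-Date"); // signing time, e.g. "20240101T120000Z"
  const expires = params.get("X-Amz-Expires"); // lifetime in seconds, e.g. "900"
  if (!amzDate || !expires) {
    throw new Error("Not a SigV4 pre-signed URL: missing X-Amz-Date or X-Amz-Expires");
  }
  const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
  if (!m) throw new Error(`Unexpected X-Amz-Date format: ${amzDate}`);
  const [, y, mo, d, h, mi, s] = m.map(Number);
  // Date.UTC takes a zero-based month.
  const signedAt = Date.UTC(y, mo - 1, d, h, mi, s);
  return new Date(signedAt + Number(expires) * 1000);
}

// Made-up URL shaped like getSignedUrl output (credential omitted, signature shortened).
const sampleUrl =
  "https://my-bucket.s3.us-east-1.amazonaws.com/some-key" +
  "?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Date=20240101T120000Z" +
  "&X-Amz-Expires=900&X-Amz-SignedHeaders=host&X-Amz-Signature=abc123";
console.log(presignedUrlExpiry(sampleUrl).toISOString()); // 2024-01-01T12:15:00.000Z
```

Because the signature covers these parameters, a client cannot extend the lifetime by editing X-Amz-Expires; it has to ask the API for a fresh URL.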

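    app.get('/', async (c) => {
      // A random key, so each upload gets its own object
      const command = new PutObjectCommand({
        Key: crypto.randomUUID(),
        Bucket: Resource.MyBucket.name,
      });

      // Return a URL the client can PUT the file to, directly in S3
      return c.text(await getSignedUrl(s3, command));
    });

Install the npm packages.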

    npm install @aws-sdk/client-s3 @aws-sdk/s3-request-presigner

Then add the relevant imports. We’ll use the extra ones below.

    import { Resource } from 'sst'
    import { getSignedUrl } from '@aws-sdk/s3-request-presigner'
    import {
      S3Client,
      GetObjectCommand,
      PutObjectCommand,
      ListObjectsV2Command,
    } from '@aws-sdk/client-s3'

    const s3 = new S3Client();

5. Download a file

We want the /latest route of our API to generate a pre-signed URL to download the last uploaded file in our S3 Bucket. Add this to your routes in src/index.ts.
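The heart of this route is choosing the newest object from the ListObjectsV2 response. As a standalone illustration (not something to paste into src/index.ts), here is that step as a dependency-free function, assuming objects shaped like the SDK’s Contents entries with an optional Key and LastModified; pickLatestKey and ListedObject are hypothetical names. It returns undefined for an empty bucket, where Contents is missing, instead of throwing, and uses reduce rather than sorting the whole list to read one element. The route code to add comes next.

```typescript
// Minimal stand-in for the fields we use from a ListObjectsV2 Contents entry.
interface ListedObject {
  Key?: string;
  LastModified?: Date;
}

// Returns the key of the most recently modified object, or undefined if there is none.
function pickLatestKey(contents: ListedObject[] | undefined): string | undefined {
  if (!contents || contents.length === 0) return undefined;
  const latest = contents.reduce((best, obj) =>
    (obj.LastModified?.getTime() ?? 0) > (best.LastModified?.getTime() ?? 0) ? obj : best,
  );
  return latest.Key;
}

console.log(
  pickLatestKey([
    { Key: "a", LastModified: new Date("2024-01-01T00:00:00Z") },
    { Key: "b", LastModified: new Date("2024-03-01T00:00:00Z") },
    { Key: "c", LastModified: new Date("2024-02-01T00:00:00Z") },
  ]),
); // b
console.log(pickLatestKey(undefined)); // undefined
```

Keep in mind that a single ListObjectsV2 call returns at most 1,000 keys; a bucket larger than that would need to be paged with ContinuationToken to find the true latest file.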

    app.get('/latest', async (c) => {
      const objects = await s3.send(
        new ListObjectsV2Command({
          Bucket: Resource.MyBucket.name,
        }),
      );

      // Newest first; Contents is undefined when the bucket is empty
      const latestFile = objects.Contents?.sort(
        (a, b) =>
          (b.LastModified?.getTime() ?? 0) - (a.LastModified?.getTime() ?? 0),
      )[0];
      if (!latestFile) {
        return c.text('No files uploaded yet.', 404);
      }

      const command = new GetObjectCommand({
        Key: latestFile.Key,
        Bucket: Resource.MyBucket.name,
      });

      // Redirect the browser to a short-lived download URL
      return c.redirect(await getSignedUrl(s3, command));
    });

Test your app

Let’s try uploading a file from your project root. Make sure to use your API URL.

    curl --upload-file package.json "$(curl https://gyrork2ll35rsuml2yr4lifuqu0tsjft.lambda-url.us-east-1.on.aws)"

Now head over to https://gyrork2ll35rsuml2yr4lifuqu0tsjft.lambda-url.us-east-1.on.aws/latest in your browser and it’ll download the file you just uploaded.
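The curl one-liner makes two requests: a GET to the API to obtain a pre-signed URL, then a PUT of the file body straight to that URL, so the file bytes never pass through the Lambda function. The same flow as a TypeScript sketch using the global fetch available in Node 18+; uploadViaApi is a hypothetical name, and fetch is injectable only so the flow can be exercised without network access.

```typescript
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

// Hypothetical client: asks the API for a pre-signed URL, then PUTs the body to S3.
async function uploadViaApi(
  apiUrl: string,
  body: string | Blob,
  fetchImpl: FetchLike = fetch,
): Promise<number> {
  // Step 1: GET / returns the pre-signed URL as plain text.
  const res = await fetchImpl(apiUrl);
  if (!res.ok) throw new Error(`API returned ${res.status}`);
  const presignedUrl = (await res.text()).trim();

  // Step 2: PUT the file directly to S3 using that URL.
  const put = await fetchImpl(presignedUrl, { method: "PUT", body });
  if (!put.ok) throw new Error(`S3 upload failed with ${put.status}`);
  return put.status;
}

// Usage, with your own function URL from `npx sst dev`:
// await uploadViaApi("https://<your-function-url>", "hello from TypeScript");
```

Since each call to the API mints a new random key, running this twice stores two separate objects.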

6. Deploy your app

Now let’s deploy your app.

    npx sst deploy --stage production

You can use any stage name here, but it’s good to create a new stage for production.
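A separate production stage matters because sst.config.ts can branch on the stage name. The app() function that sst init generates typically sets removal along the lines of input?.stage === "production" ? "retain" : "remove", so removing the production stage keeps data resources such as the S3 Bucket, while other stages are cleaned up completely. A dependency-free sketch of that decision; removalPolicyFor is a hypothetical helper, and your generated config may differ.

```typescript
type RemovalPolicy = "retain" | "remove";

// Hypothetical helper mirroring the stage check in a typical sst.config.ts:
// production retains data resources like buckets when the stage is removed,
// every other stage is removed entirely.
function removalPolicyFor(stage: string | undefined): RemovalPolicy {
  return stage === "production" ? "retain" : "remove";
}

console.log(removalPolicyFor("production")); // retain
console.log(removalPolicyFor("dev")); // remove
```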

Containers

We are going to create a Hono API, add an S3 Bucket for file uploads, and deploy it in a container with the Cluster component.

Before you get started, make sure to configure your AWS credentials.

1. Create a project

Let’s start by creating our app.

    npm create hono@latest aws-hono-container
    cd aws-hono-container

We are picking the nodejs template.

Init SST

Now let’s initialize SST in our app.

    npx sst@latest init
    npm install

Select the defaults and pick AWS. This’ll create an sst.config.ts file in your project root.

2. Add a Service

To deploy our Hono app in a container, we’ll use AWS Fargate with Amazon ECS. Replace the run function in your sst.config.ts.

    async run() {
      const vpc = new sst.aws.Vpc("MyVpc");
      const cluster = new sst.aws.Cluster("MyCluster", { vpc });

      new sst.aws.Service("MyService", {
        cluster,
        loadBalancer: {
          // Listen on port 80 and forward to the Hono server on port 3000
          ports: [{ listen: "80/http", forward: "3000/http" }],
        },
        dev: {
          // In dev mode, run the app locally instead of in a container
          command: "npm run dev",
        },
      });
    }

This creates a VPC and an ECS Cluster with a Fargate service in it.

The dev.command tells SST to run our Hono app locally in dev mode, instead of deploying it to the cluster.
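Each entry in loadBalancer.ports pairs a "<port>/<protocol>" string for the load balancer with one for the container: here it listens on port 80 over HTTP and forwards to port 3000, which is where the nodejs template’s Hono server listens. A sketch of how such a spec breaks down; parsePortSpec is hypothetical and the protocol list is an assumed subset, so check the Service docs for what SST actually accepts.

```typescript
// Assumed subset of protocols, for illustration only.
const KNOWN_PROTOCOLS = ["http", "https", "tcp", "udp", "tls"] as const;
type Protocol = (typeof KNOWN_PROTOCOLS)[number];

interface PortSpec {
  port: number;
  protocol: Protocol;
}

// Hypothetical helper: parses strings like "80/http" or "3000/http".
function parsePortSpec(spec: string): PortSpec {
  const [portText, protocol] = spec.split("/");
  const port = Number(portText);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid port in "${spec}"`);
  }
  if (!(KNOWN_PROTOCOLS as readonly string[]).includes(protocol)) {
    throw new Error(`Unknown protocol in "${spec}"`);
  }
  return { port, protocol: protocol as Protocol };
}

const portRule = { listen: "80/http", forward: "3000/http" };
const listenSpec = parsePortSpec(portRule.listen);
const forwardSpec = parsePortSpec(portRule.forward);
console.log(
  `listen :${listenSpec.port} (${listenSpec.protocol}) -> forward :${forwardSpec.port} (${forwardSpec.protocol})`,
);
// listen :80 (http) -> forward :3000 (http)
```

If you change the port your Hono server listens on, the forward side of the rule has to change with it.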

Start dev mode

Run the following to start dev mode. This’ll start SST and your Hono app.

    npx sst dev

Once complete, click on MyService in the sidebar and open your Hono app in your browser.

3. Add an S3 Bucket

Let’s add an S3 Bucket for file uploads. Add this to your sst.config.ts below the Vpc component.

    const bucket = new sst.aws.Bucket("MyBucket");

Link the bucket

Now, link the bucket to the container.

    new sst.aws.Service("MyService", {
      // ...
      link: [bucket],
    });

This will allow us to reference the bucket in our Hono app.

4. Upload a file

We want a POST request made to the / route to upload a file to our S3 bucket. Let’s add this below our Hello Hono route in our src/index.ts.

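    app.post('/', async (c) => {
      const body = await c.req.parseBody();
      const file = body['file'];
      // parseBody returns plain strings for non-file fields
      if (!file || typeof file === 'string') {
        return c.text('Expected a file in the "file" field.', 400);
      }

      const params = {
        Bucket: Resource.MyBucket.name,
        ContentType: file.type,
        Key: file.name,
        Body: file,
      };
      // Upload splits large files into parts (a multipart upload)
      const upload = new Upload({
        params,
        client: s3,
      });
      await upload.done();

      return c.text('File uploaded successfully.');
    });

Add the imports. We’ll use the extra ones below.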

    import { Resource } from 'sst'
    import {
      S3Client,
      GetObjectCommand,
      ListObjectsV2Command,
    } from '@aws-sdk/client-s3'
    import { Upload } from '@aws-sdk/lib-storage'
    import { getSignedUrl } from '@aws-sdk/s3-request-presigner'

    const s3 = new S3Client();

And install the npm packages.

    npm install @aws-sdk/client-s3 @aws-sdk/lib-storage @aws-sdk/s3-request-presigner

5. Download the file

We’ll add a /latest route that’ll download the latest file in our S3 bucket. Let’s add this below our upload route in src/index.ts.

    app.get('/latest', async (c) => {
      const objects = await s3.send(
        new ListObjectsV2Command({
          Bucket: Resource.MyBucket.name,
        }),
      );

      // Newest first; Contents is undefined when the bucket is empty
      const latestFile = objects.Contents?.sort(
        (a, b) =>
          (b.LastModified?.getTime() ?? 0) - (a.LastModified?.getTime() ?? 0),
      )[0];
      if (!latestFile) {
        return c.text('No files uploaded yet.', 404);
      }

      const command = new GetObjectCommand({
        Key: latestFile.Key,
        Bucket: Resource.MyBucket.name,
      });

      return c.redirect(await getSignedUrl(s3, command));
    });

Test your app

To upload a file, run the following from your project root.

    curl -F file=@package.json http://localhost:3000/

This should upload package.json. Now head over to http://localhost:3000/latest in your browser and it’ll show you what you just uploaded.
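curl -F sends a multipart/form-data POST with a field named file, which is what c.req.parseBody() reads on the server. The same request from TypeScript, as a sketch using the global fetch, FormData, and Blob available in Node 18+; uploadFile is a hypothetical name, and fetch is injectable so the request can be checked without a running server.

```typescript
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

// Hypothetical client for the POST / route: sends one file in a multipart form.
async function uploadFile(
  baseUrl: string,
  fileName: string,
  contents: string,
  contentType = "application/octet-stream",
  fetchImpl: FetchLike = fetch,
): Promise<string> {
  const form = new FormData();
  // The field name must match body['file'] in the route; the file name becomes the S3 key.
  form.append("file", new Blob([contents], { type: contentType }), fileName);
  // fetch sets the multipart Content-Type header, including the boundary, itself.
  const res = await fetchImpl(new URL("/", baseUrl).toString(), { method: "POST", body: form });
  if (!res.ok) throw new Error(`Upload failed with ${res.status}`);
  return res.text();
}

// Usage against dev mode:
// await uploadFile("http://localhost:3000", "hello.txt", "hello", "text/plain");
```

Because the route uses file.name as the key, uploading two files with the same name overwrites the first one.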

6. Deploy your app

To deploy our app, we’ll first add a Dockerfile. It builds our app by running the build script in our package.json.

    FROM node:lts-alpine AS base

    FROM base AS builder
    RUN apk add --no-cache gcompat
    WORKDIR /app
    COPY package*json tsconfig.json src ./
    # Copy over generated types
    COPY sst-env.d.ts* ./
    RUN npm ci && \
        npm run build && \
        npm prune --production

    FROM base AS runner
    WORKDIR /app
    RUN addgroup --system --gid 1001 nodejs
    RUN adduser --system --uid 1001 hono
    COPY --from=builder --chown=hono:nodejs /app/node_modules /app/node_modules
    COPY --from=builder --chown=hono:nodejs /app/dist /app/dist
    COPY --from=builder --chown=hono:nodejs /app/package.json /app/package.json

    USER hono
    EXPOSE 3000
    CMD ["node", "/app/dist/index.js"]

This builds our Hono app in a Docker image.

Let’s also add a .dockerignore file in the root.

    node_modules
    .git

To compile our TypeScript files, we’ll need to add the following to the tsconfig.json.

    {
      "compilerOptions": {
        // ...
        "outDir": "./dist"
      },
      "exclude": ["node_modules"]
    }

Install TypeScript.

    npm install typescript --save-dev

And add a build script to our package.json.

    "scripts": {
      // ...
      "build": "tsc"
    }

Now, to build our Docker image and deploy, we run:

    npx sst deploy --stage production

You can use any stage name here, but it’s good to create a new stage for production. This’ll give you the URL of your Hono app deployed as a Fargate service.

    ✓ Complete
       MyService: http://prod-MyServiceLoadBalanc-491430065.us-east-1.elb.amazonaws.com

Connect the console

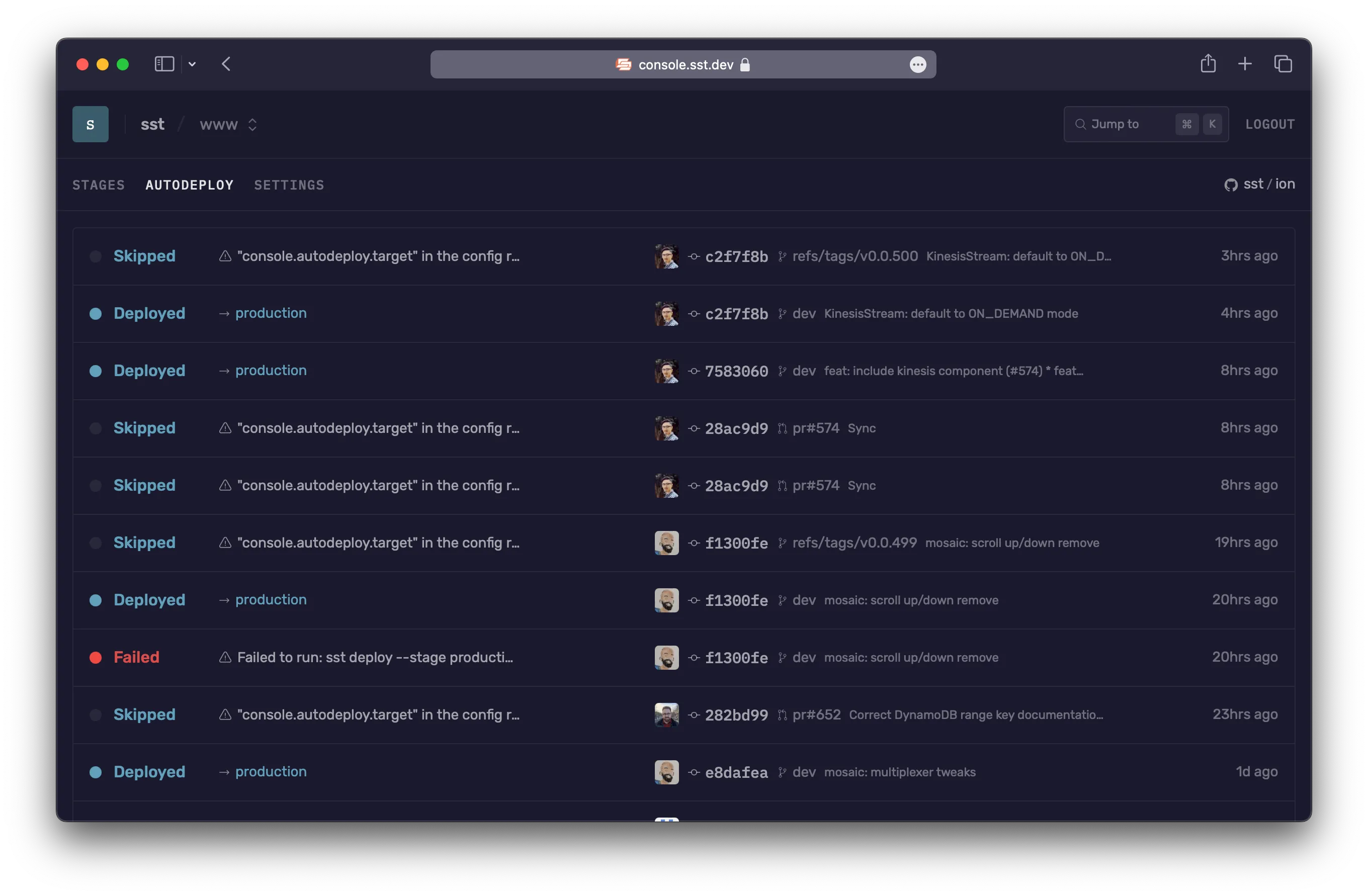

As a next step, you can set up the SST Console to git push to deploy your app and view logs from it.

You can create a free account and connect it to your AWS account.